
At Nvidia GTC 2025, GM quietly made one of its most aggressive moves toward full automation, without loud marketing or futuristic buzzwords. Instead, it brought a clear message: Nvidia's AI platforms are becoming central to how GM wants to run its factories, train robots, and develop self-driving cars. After years of fragmented approaches and some stalled pilots, GM is now leaning into a unified AI strategy with Nvidia at the core.

This shift wasn't just about hype. GTC 2025 highlighted real-world rollouts—autonomous driving simulations that reduce on-road testing, AI-powered robotics trained in virtual environments, and factories beginning to run predictive systems that flag errors before human supervisors ever spot them. GM isn't alone, but it's going all in on Nvidia AI across almost every layer of its operations. The question is no longer whether AI will touch the future of car making. It's about who can do it well and fast enough to stay in the game.

GM's factory network is one of the largest and most complex in the world, making it an ideal testbed for AI-driven automation. What's new is how Nvidia's AI stack, especially the Isaac robotics platform and Omniverse simulation tools, is helping GM retrofit its existing factories for smarter production without tearing everything down.

Instead of building brand-new smart factories from scratch, GM is simulating robotics operations inside Omniverse. This allows the company to design and test robotic processes virtually, reducing downtime on real floors. It’s already using this method to improve repetitive assembly tasks, like battery pack installation for EVs, where minor adjustments in alignment can cause big delays. In the past, fixing this would’ve taken days of trial-and-error on-site. Now, adjustments are made in digital twins of the factory, and results are applied with minimal disruption.

Isaac, Nvidia’s robotics platform, allows GM to train robot arms to handle delicate tasks with better accuracy. These aren’t humanoid machines—they’re task-specific bots handling welding, torque checks, and other roles with improved consistency. Importantly, the training is done mostly in simulation. The result is faster deployment, fewer workplace injuries, and tighter quality control.

The use of Nvidia AI here isn’t just about cost reduction. It’s about finding patterns. With predictive analytics, GM can now identify components at risk of failure before assembly even starts. Sensors feed into machine learning models that spot deviations long before a human would. It’s slow, steady progress—but the long-term shift is a smarter, less wasteful production line.
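To make the idea of spotting deviations concrete, here is a minimal sketch of a rolling z-score check on a stream of sensor readings. It is a simple statistical stand-in for illustration, not GM's or Nvidia's actual model; the function name `flag_deviations`, the window size, the threshold, and the torque readings are all assumptions.

```python
from collections import deque
from statistics import mean, stdev


def flag_deviations(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate sharply from the recent baseline.

    Hypothetical helper: compares each reading with the mean and standard
    deviation of the previous `window` accepted readings.
    """
    recent = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
                continue  # keep the outlier out of the baseline
        recent.append(value)
    return flagged


# Illustrative torque readings in newton-metres (made-up values).
torque_nm = [50.1, 49.9, 50.0, 50.2, 49.8, 50.1, 57.5, 50.0, 49.9]
print(flag_deviations(torque_nm))  # → [6]
```

A production system would likely replace the z-score with a trained model, but the flow is the same: build a baseline from recent data, score each new reading, and flag what falls outside it.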

GM’s self-driving division, Cruise, had a rough year with operational pauses and public backlash. But at GTC 2025, GM made it clear it’s not walking away from autonomy—it’s just changing how it gets there. Nvidia’s Drive platform is becoming central to this recalibration.

The Drive Hyperion platform, which handles everything from sensor fusion to neural network inference, is now being integrated more directly into GM’s new EV architecture. That means the hardware, software, and vehicle design are all being coordinated from the start. GM’s pitch isn’t full robotaxis anymore. Instead, it’s focused on partial autonomy for specific use cases: factory logistics, closed-loop delivery systems, and long-haul trucking routes.

Nvidia AI allows GM to simulate millions of miles of road scenarios without putting a car on the street. These are not just CGI visuals. These are data-heavy simulations that test edge cases, weather patterns, and rare interactions between humans and machines. The level of detail allows engineers to retrain AI models faster and with higher accuracy, which has been one of the biggest bottlenecks in autonomous driving.
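A toy version of this kind of scenario sweep can be sketched in a few lines. The example below samples random speed, road-friction, and obstacle-distance combinations and records the cases where a simple stopping-distance model says the vehicle cannot stop in time. It assumes nothing about Nvidia's simulation APIs; the function names, parameter ranges, and friction values are illustrative assumptions, and real simulators model far more than this.

```python
import random

G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance(speed_mps, reaction_s, friction):
    """Reaction distance plus braking distance: v*t + v^2 / (2*mu*g)."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * friction * G)


def run_scenarios(n, seed=0):
    """Sample n hypothetical scenarios and return those where the car cannot stop."""
    rng = random.Random(seed)  # seeded so a failing case can be replayed
    failures = []
    for _ in range(n):
        scenario = {
            "speed_mps": rng.uniform(8.0, 20.0),
            "friction": rng.choice([0.8, 0.5, 0.25]),  # dry, wet, icy (illustrative)
            "obstacle_m": rng.uniform(15.0, 60.0),
            "reaction_s": 0.3,  # assumed system latency
        }
        needed = stopping_distance(
            scenario["speed_mps"], scenario["reaction_s"], scenario["friction"]
        )
        if needed > scenario["obstacle_m"]:
            failures.append(scenario)
    return failures


failures = run_scenarios(10_000)
print(f"{len(failures)} of 10000 sampled scenarios need a different response")
```

The failing scenarios are the interesting output: they are the edge cases an engineer would replay, inspect, and feed back into retraining.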

Nvidia’s real advantage here is time compression. What once took months of field testing can now be run overnight in Omniverse. GM can crash-test new safety features, simulate pedestrian behavior, and reprogram driving logic across entire vehicle fleets in a matter of days.

While full autonomy remains far off, this system gives GM a better foundation. Software updates can improve over time as real-world driving data is fed back through Nvidia’s AI tools and used to retrain the models. GM is not chasing headlines with robotaxis. It’s building toward a gradual, reliable rollout of smart-driving systems that work in the background.

It's tempting to think of robots as shiny, human-like beings walking around a plant. However, that's not what GM is doing with Nvidia. The company is rolling out industrial robots—think of smart forklifts, welding arms, mobile assistants—that are powered by real-time machine learning from Nvidia's platforms. These robots don't just follow pre-written commands. They adapt.

For example, when supply chain disruptions change the location of parts within a warehouse, these mobile robots re-learn the layout using vision systems and object recognition, all supported by Nvidia AI chips. They’re not blindly following tracks or QR codes. They’re identifying shelving changes, calculating new paths, and alerting humans when something doesn’t make sense. The same goes for torque-sensitive tasks. Instead of applying a fixed torque, the system now learns how different bolts or materials respond and adjusts accordingly. That level of finesse was almost impossible without AI.
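The "calculating new paths" step can be illustrated with a minimal sketch: a breadth-first search over a grid map that is re-run whenever the perceived layout changes. This is a textbook technique chosen for illustration, not a description of the planner GM's robots use; the grid encoding and the `shortest_path` function are assumptions.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a grid map; '#' cells are blocked by shelving.

    Returns the list of (row, col) cells from start to goal, or None if
    no route exists (the case where a robot would alert a human).
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    came_from = {start: None}
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (
                0 <= nr < rows
                and 0 <= nc < cols
                and grid[nr][nc] != "#"
                and nxt not in came_from
            ):
                came_from[nxt] = cell
                queue.append(nxt)
    return None


before = ["...", "...", "..."]   # open floor
after = [".#.", ".#.", "..."]    # shelving moved into the middle aisle
blocked = [".#.", ".#.", ".#."]  # aisle fully closed off

print(len(shortest_path(before, (0, 0), (0, 2))))  # → 3
print(len(shortest_path(after, (0, 0), (0, 2))))   # → 7
print(shortest_path(blocked, (0, 0), (0, 2)))      # → None
```

In this sketch the vision system's job is reduced to producing the updated grid; the planner simply runs again on whatever map it is given.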

This approach matters because it’s practical. GM isn’t trying to “replace” workers. These robots are handling dangerous, error-prone jobs where fatigue or misalignment could lead to injuries or defects. The smarter the robots get, the more they can help humans focus on high-skill tasks, and that seems to be GM’s long-term goal: cooperative automation rather than full replacement.

The partnership with Nvidia also brings flexibility. If GM shifts a line from combustion-engine vehicles to EVs or battery packs, it can simulate the new processes with minimal lead time. That adaptability means faster pivoting across product lines, which is especially important as EV demand and regulations keep shifting.

GM's Nvidia AI strategy focuses on practical, scalable automation rather than flashy demos. At GTC 2025, it highlighted real systems already in use—robotic torque arms, predictive maintenance, and driving simulations—built on Nvidia's platform. GM isn't replacing its legacy infrastructure; instead, it is enhancing it intelligently through real-world data and feedback. This measured approach avoids hype, aiming instead for steady improvement in cars and factories alike. It shows how meaningful automation grows through experience, not spectacle, laying groundwork for smarter operations over time.

Advertisement

Looking for the next big thing in Python development? Explore upcoming libraries like PyScript, TensorFlow Quantum, FastAPI 2.0, and more that will redefine how you build and deploy systems in 2025

How accelerated inference using Optimum and Transformers pipelines can significantly improve model speed and efficiency across AI tasks. Learn how to streamline deployment with real-world gains

Curious how to build your first serverless function? Follow this hands-on AWS Lambda tutorial to create, test, and deploy a Python Lambda—from setup to CloudWatch monitoring

Gradio is joining Hugging Face in a move that simplifies machine learning interfaces and model sharing. Discover how this partnership makes AI tools more accessible for developers, educators, and users

How explainable artificial intelligence helps AI and ML engineers build transparent and trustworthy models. Discover practical techniques and challenges of XAI for engineers in real-world applications

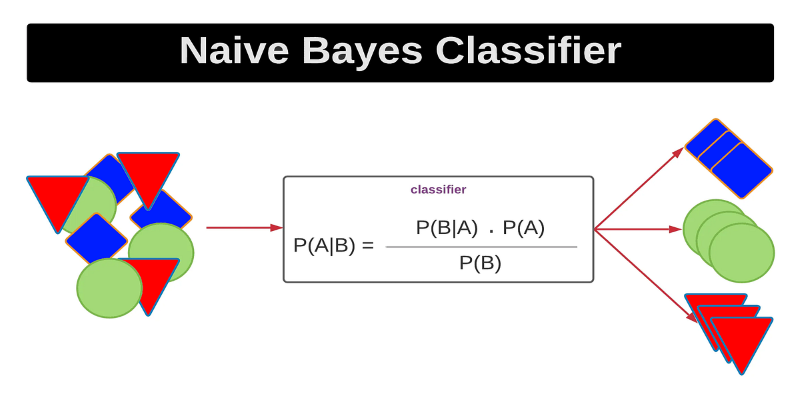

Curious how a simple algorithm can deliver strong ML results with minimal tuning? This beginner’s guide breaks down Naive Bayes—its logic, types, code examples, and where it really shines

How Sempre Health is accelerating its ML roadmap with the help of the Expert Acceleration Program, improving model deployment, patient outcomes, and internal efficiency

Explore Proximal Policy Optimization, a widely-used reinforcement learning algorithm known for its stable performance and simplicity in complex environments like robotics and gaming

Struggling with a small dataset? Learn practical strategies like data augmentation, transfer learning, and model selection to build effective machine learning models even with limited data

Explore how ACID and BASE models shape database reliability, consistency, and scalability. Learn when to prioritize structure versus flexibility in your data systems

The White House has introduced new guidelines to regulate chip licensing and AI systems, aiming to balance innovation with security and transparency in these critical technologies

Wondering how Docker works or why it’s everywhere in devops? Learn how containers simplify app deployment—and how to get started in minutes