Gradio, a lightweight tool that lets developers create simple interfaces for machine learning models, is officially becoming part of Hugging Face. This news is more than a corporate headline. It signals a shift in how people interact with AI tools. Over the past few years, Gradio has quietly become a favourite among researchers, developers, and educators who want to let others try out models without dealing with backend systems. Now, with Hugging Face, the path from building a model to sharing it gets even more direct. Here's a closer look at what this move means.

Gradio took off by offering a quick and simple way to wrap machine learning models in a shareable web interface. With just a few lines of code, developers could set up apps that let others test their models in real time. There was no need for frontend development or server setup: just a quick way to get feedback or showcase a project.
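To make the "few lines of code" claim concrete, here is a minimal sketch. It assumes Gradio's `gr.Interface` class (installed with `pip install gradio`), and the `classify_sentiment` function is a hypothetical toy stand-in for a real trained model, not anything from Gradio or Hugging Face.

```python
# Minimal Gradio sketch (assumption: Gradio's gr.Interface API, installed via `pip install gradio`).
# The "model" is a hypothetical toy stand-in, not a real ML model.

POSITIVE_WORDS = {"good", "great", "love", "excellent", "happy"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "awful", "sad"}


def classify_sentiment(text: str) -> str:
    """Hypothetical stand-in for a trained classifier: compares keyword counts."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def build_demo():
    """Wrap the function in a web UI with one text input and one text output."""
    import gradio as gr  # imported here so classify_sentiment works without Gradio installed

    return gr.Interface(
        fn=classify_sentiment,
        inputs="text",
        outputs="text",
        title="Toy sentiment demo",
    )
```

Calling `build_demo().launch()` starts a local web server for the demo, and passing `share=True` to `launch()` also creates a temporary public link. The model function stays plain Python; Gradio only maps its input and output to UI components, which is why swapping in a real model requires no frontend work.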

The tool filled a real need. Machine learning had become more open, but many models still lived in notebooks or repos, far from end users. Gradio helped fix that. Suddenly, anyone could try out an image classifier, summarizer, or chatbot through a clean interface. It didn’t require advanced tech knowledge to use or share.

As more people worked with AI tools, Gradio helped bridge the gap between research and hands-on use. It wasn't just about showing that a model worked; it was about letting others try it. That direct access is a big part of what made Gradio popular.

Hugging Face is known for its Transformers library, but it has grown far beyond that. It now offers a full platform for hosting, sharing, and exploring AI models and datasets. Hugging Face's community has become a home for open-source machine learning.

Gradio fits into this vision neatly. Thousands of models hosted on Hugging Face already use Gradio-based demos. Making the connection official brings the two tools into better alignment. Together, they help turn static models into interactive apps.

Now, developers who upload models to Hugging Face can create a live interface for them using Gradio without extra setup. This helps reduce friction in the model-sharing process. Instead of separate tools and workflows, it becomes easier to keep everything in one place.

Another benefit is feedback. Gradio demos make it simple to collect user input and see how people interact with models. This feedback loop is helpful for improving accuracy, identifying issues, and guiding future updates. When paired with Hugging Face’s hosting and sharing features, it supports a complete development cycle—from training to testing to tuning.

The collaboration also supports Hugging Face’s broader goal: making machine learning tools more useful and easier to access for more people, not just those with deep technical backgrounds.

The Gradio and Hugging Face partnership points to a broader shift. AI is moving away from closed systems and toward open, testable models that anyone can explore. Making models easier to try, without code or setup, opens the door to new types of users.

This affects how AI gets built and shared. Instead of publishing models and expecting users to figure out how to run them, developers can offer demos from the start. It makes research more transparent and usable. It also helps others build on existing work faster.

In classrooms, Gradio makes it easier to teach machine learning concepts by providing tools that are visual and interactive. For companies, it lowers the cost of early prototyping. For independent creators, it offers a way to test ideas publicly without building full platforms.

The open-source ecosystem benefits, too. As more demos go live, they become examples others can study, learn from, and improve upon. Model development turns into a shared process, not just a finished product.

Hugging Face and Gradio also support different types of learning. Some people learn by reading code; others by trying things out. When tools support both, they make machine learning more approachable.

Now that Gradio is part of Hugging Face, deeper integration is likely. Developers may see better ways to manage demos, including auto-generated interfaces or one-click publishing. There’s potential for tighter syncing between models and interfaces, reducing manual work when models are updated.

The Hugging Face platform already includes Spaces, which hosts live demos. Gradio's role in powering these apps may expand, making Spaces easier to use and manage. The workflow from model creation to deployment could become faster and less fragmented.

For new developers, this simplifies the learning curve. You won’t need to stitch together tools or write extra code just to show your work. That lowers barriers and encourages more people to build and share.

From a community perspective, this change is a boost. People can find, test, and share working AI apps more easily. As more developers put up live demos, it adds value to Hugging Face’s platform, making it a one-stop place for discovery and experimentation.

It’s also good news for educators. Students can now build and interact with models through a visual interface, even if they’re just starting out. This helps reinforce concepts with real-world applications. And for teams working on ML-powered products, quick Gradio demos can make collaboration easier across roles.

Gradio's original focus on usability won't get lost. If anything, Hugging Face's resources and user base will help that mission expand. As more people enter the field, tools that reduce technical hurdles will remain essential.

Gradio joining Hugging Face brings together two widely used tools in open-source AI, making it easier to move from building models to sharing them with real users. Developers can work faster, educators get better teaching tools, and anyone curious about AI gains access without needing to code. This integration streamlines the process, encourages collaboration, and supports learning. Hugging Face is now more than a model hub: it offers a full environment for creating and testing interactive machine learning applications.

Advertisement

Confused about DAO and DTO in Python? Learn how these simple patterns can clean up your code, reduce duplication, and improve long-term maintainability

The White House has introduced new guidelines to regulate chip licensing and AI systems, aiming to balance innovation with security and transparency in these critical technologies

Could one form field expose your entire database? Learn how SQL injection attacks work, what damage they cause, and how to stop them—before it’s too late

How are conversational chatbots in the Omniverse helping small businesses stay competitive? Learn how AI tools are shaping customer service, marketing, and operations without breaking the budget

How TAPEX uses synthetic data for efficient table pre-training without relying on real-world datasets. Learn how this model reshapes how AI understands structured data

Learn how to simplify machine learning integration using Google’s Mediapipe Tasks API. Discover its key features, supported tasks, and step-by-step guidance for building real-time ML applications

How to train large-scale language models using Megatron-LM with step-by-step guidance on setup, data preparation, and distributed training. Ideal for developers and researchers working on scalable NLP systems

Prepare for your Snowflake interview with key questions and expert answers covering Snowflake architecture, virtual warehouses, time travel, micro-partitions, concurrency, and more

Explore how ACID and BASE models shape database reliability, consistency, and scalability. Learn when to prioritize structure versus flexibility in your data systems

Discover how Google BigQuery revolutionizes data analytics with its serverless architecture, fast performance, and versatile features

How BERT, a state of the art NLP model developed by Google, changed language understanding by using deep context and bidirectional learning to improve natural language tasks

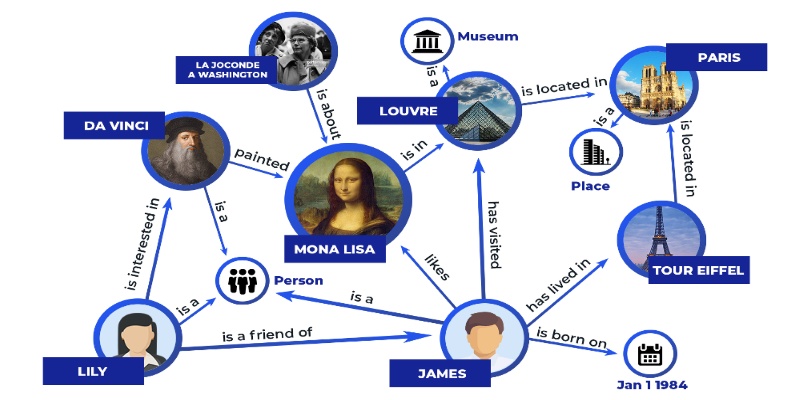

Discover how knowledge graphs work, why companies like Google and Amazon use them, and how they turn raw data into connected, intelligent systems that power search, recommendations, and discovery