Advertisement

Snowflake has carved out a serious space for itself in the world of data warehousing, and that means if you’re prepping for an interview, you better show up sharp. Whether you're eyeing a data engineering role, a data analyst seat, or even something more architecture-heavy, you’re likely to face some curveballs around Snowflake's unique setup. It isn’t just another cloud database — it’s different, and your answers should reflect that.

Let's cut the small talk and get to what really matters: the questions that arise, the thinking behind them, and how to respond without stumbling.

Start with this: most traditional data warehouses struggle to separate compute from storage. That means when your queries get heavier, the system can start choking. Snowflake dodges this issue. It splits storage and compute into separate layers. That alone makes it easier to scale things up without dragging performance down.

And then there’s the multi-cluster architecture. Snowflake allows for automatic scaling of compute clusters to handle concurrency. Say goodbye to queuing or slowdowns when multiple teams query data simultaneously.

Also worth mentioning? It’s a fully-managed service. No hardware to configure, no tuning nightmares. The whole setup runs on AWS, Azure, or GCP — your pick.

A virtual warehouse is Snowflake’s term for the compute layer. Think of it as a bundle of resources that processes your SQL queries and data-loading jobs.

It doesn’t store data — it only handles the grunt work. The real kicker? You can spin one up, run your job, then suspend it. Billing stops the second it’s idle. That flexibility is a big reason Snowflake’s cost model makes sense for many teams.

Also, you can set up multiple virtual warehouses on the same data without stepping on each other’s toes. Devs get their warehouse. Analysts get theirs. Nobody waits on anyone.

This one’s all about peace of mind. Time Travel lets you access historical data — not just backups, but actual snapshots of data as it existed at specific times. You can restore tables, query previous states, or recover from accidental deletions.

By default, Snowflake offers 1-day retention, but it can go up to 90 days on the Enterprise Edition. It's especially handy during testing, migration, or when someone makes an "oops" moment on a production table.

If you want to stand out, talk about micro-partitions. Snowflake stores table data in these compressed, columnar blocks. Each micro-partition is automatically indexed with metadata, like min/max values for each column.

Here’s why it matters: this design enables pruning. So, when you query a table, Snowflake doesn’t scan every row. It skips entire blocks that aren’t relevant. That’s how Snowflake keeps response times low even on massive datasets.

You don’t manage micro-partitions manually, and you don’t need to. It’s baked into the engine.

Unlike some systems that need you to flatten or pre-process semi-structured data, Snowflake reads and queries it natively. You store it in a VARIANT column, and from there, you can use dot notation or the FLATTEN() function to access nested values.

Say you have logs in JSON format — no transformation needed. Just stick them in a table, and you’re ready to run SELECT queries on specific fields. You can even join this data with structured tables without much fuss.

Snowflake supports several flavors, and each comes with its own use case:

Permanent tables: These stick around until you drop them. Standard option for production data.

Transient tables: These don’t support Time Travel and cost less to store — good for temporary staging where rollback isn’t a concern.

Temporary tables: Tied to your session. Once you disconnect, they’re gone.

External tables: These let you query files stored in cloud storage (like S3) without loading them into Snowflake.

Naming each correctly in the DDL helps Snowflake manage storage and costs behind the scenes.

Concurrency used to be a pain point in traditional warehouses. Too many users running heavy queries? Everything slows down, not in Snowflake.

Thanks to its multi-cluster compute model, each virtual warehouse works independently. If one gets overloaded, Snowflake can add clusters behind the scenes (if auto-scaling is enabled). So users don’t get blocked, and queries keep flowing.

There’s no resource contention between virtual warehouses either. That means devs can run back-end scripts while analysts dig into dashboards — all without tripping over each other.

Snowflake has three levels of caching:

Result Cache: Stores results of previously executed queries. If you rerun a query and nothing has changed, Snowflake returns the result instantly — no compute time, no cost.

Metadata Cache: Tracks schema info and helps optimize query planning.

Data Cache: Happens at the virtual warehouse level. If data was recently queried, it might be stored in memory and accessed faster the next time.

Knowing how each cache behaves can help you design more efficient pipelines and avoid unnecessary compute usage.

When you’re walking into a Snowflake interview, don’t just memorize definitions. Understand how the system thinks — and why it works the way it does. That’s what interviewers are watching for. Real-world experience counts more than buzzwords. And if you've played around with Snowflake, especially with its query planner or semi-structured data features, talk about it. Bring up what you’ve built, what broke, and how you fixed it. Even small-scale projects can show how well you grasp the platform. That might be the difference between “Thanks for coming in” and “When can you start?”

Advertisement

Explore how ACID and BASE models shape database reliability, consistency, and scalability. Learn when to prioritize structure versus flexibility in your data systems

Confused about where your data comes from? Discover how data lineage tracks every step of your data’s journey—from origin to dashboard—so teams can troubleshoot fast and build trust in every number

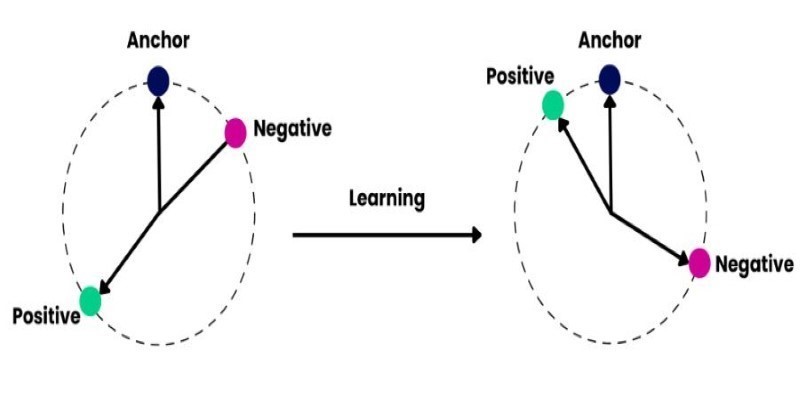

How to train and fine-tune sentence transformers to create high-performing NLP models tailored to your data. Understand the tools, methods, and strategies to make the most of sentence embedding models

Learn how to create a Telegram bot using Python with this clear, step-by-step guide. From getting your token to writing commands and deploying your bot, it's all here

How accelerated inference using Optimum and Transformers pipelines can significantly improve model speed and efficiency across AI tasks. Learn how to streamline deployment with real-world gains

Confused about DAO and DTO in Python? Learn how these simple patterns can clean up your code, reduce duplication, and improve long-term maintainability

How BERT, a state of the art NLP model developed by Google, changed language understanding by using deep context and bidirectional learning to improve natural language tasks

How TAPEX uses synthetic data for efficient table pre-training without relying on real-world datasets. Learn how this model reshapes how AI understands structured data

What does GM’s latest partnership with Nvidia mean for robotics and automation? Discover how Nvidia AI is helping GM push into self-driving cars and smart factories after GTC 2025

The Hugging Face Fellowship Program offers early-career developers paid opportunities, mentorship, and real project work to help them grow within the inclusive AI community

The White House has introduced new guidelines to regulate chip licensing and AI systems, aiming to balance innovation with security and transparency in these critical technologies

Learn how Redis OM for Python transforms Redis into a model-driven, queryable data layer with real-time performance. Define, store, and query structured data easily—no raw commands needed