
When most people picture summer, they think of sunshine, travel, or taking it slow. At Hugging Face, the season brings a different kind of energy. It’s a time when collaboration picks up, more voices join the conversation, and ideas move quickly. New contributors come on board, models get refined, and community projects grow. The vibe isn’t corporate or formal—it’s relaxed but focused. Summer becomes a season of building things that matter, fueled by curiosity, openness, and the kind of teamwork that makes technical work feel personal.

Hugging Face has built its identity around openness and community. During the summer, these values become more visible. Many student developers and early-career contributors join in through internships or fellowships, and their work doesn’t get tucked away in the background. They're trusted with tasks that shape the platform—whether that’s model fine-tuning, improving tokenizers, or contributing to documentation.

Much of this work happens in public, where it can be tested, reviewed, and improved by others. Code commits, pull requests, and issue threads show a surge in activity. The feedback loop is fast, and contributors are encouraged to learn by doing. Summer’s less rigid pace makes it easier for people to explore, fail a bit, and get better.

But contributing to Hugging Face isn’t just about writing code. People help by writing tutorials, updating datasets, testing models, or improving UX on Spaces. It’s an environment that values participation from different skill sets and backgrounds. Community calls, informal demo sessions, and GitHub discussions keep things interactive and open. For many contributors, summer work turns into long-term involvement.

The summer months tend to be good timing for updates and releases. Research teams often wrap up work in the spring, and results make their way into the Hugging Face ecosystem soon after. Whether it’s through academic partnerships or independent work, Hugging Face integrates new ideas quickly.

The Transformers library often sees major updates in summer, such as support for new architectures, more efficient ways to run existing models, or extended functionality for multilingual tasks. These changes are often driven by community suggestions and pull requests, not just internal roadmaps.

At the same time, the Hugging Face Hub keeps growing. Developers and researchers upload models, datasets, and checkpoints on a daily basis. Model Cards, the short guides that explain how a model works, what it's good for, and where its limits are, get reviewed and refined. This helps new users understand what they're downloading and using.

Spaces, Hugging Face's platform for hosting live ML apps, also sees more activity. These apps range from playful projects, such as poetry bots, to practical ones, like document summarizers. With fewer deadlines and more active contributors, the summer season becomes ideal for testing unusual ideas and making them public.

With team members spread across many countries, Hugging Face doesn’t follow a single routine. Summer means different things depending on where you are. For some, it’s a chance to step back and focus on creative work. For others, it’s their first time contributing to a major open-source project.

People often step outside their usual roles. Someone working on infrastructure might spend a few weeks testing new multimodal features. Designers may rethink the look of Spaces or propose ways to simplify workflows. Interns often take on real problems and solve them in public, getting feedback and encouragement along the way.

Without heavy scheduling or strict cycles, collaboration becomes easier. Conversations happen in GitHub threads, Discord chats, and small team check-ins. Long-time contributors often mentor newer ones without needing formal assignments. The culture encourages learning and curiosity over hierarchy.

Even with time off and travel, progress continues. Someone’s always online in a different time zone, keeping the rhythm steady. The flexibility helps people stay productive while still enjoying the season. It’s this shared understanding—of both work and downtime—that makes the summer feel balanced.

The effects of a Hugging Face summer aren’t limited to internal work. The wider AI community feels the impact. Public projects, open models, and learning resources reach more people. Hackathons often spring up, inviting beginners and experts to build tools using Hugging Face libraries. For many, it’s their first hands-on experience with training or deploying a model.

Webinars, tutorials, and workshops increase during this season. Topics range from retrieval-augmented generation to instruction tuning. Hugging Face often works with universities, labs, and nonprofits to open these sessions to more learners. The relaxed pace of summer helps more people find the time to attend, ask questions, and try things out.

Ethical development gets more attention during this time, too. With fewer deadlines, there's space to look at bias audits, sustainability concerns, and how models are used in real applications. Documentation efforts, transparency reports, and community discussions about fair AI become more active.

The AI community plays a bigger role during this time. Hugging Face doesn't just build tools—it helps connect people. With an open and collaborative approach, it creates space for everyone, from students to researchers, to get involved. Summer makes it easier for more people to take part and explore what they can build together.

Summer at Hugging Face blends progress with a relaxed pace, creating an atmosphere where people can experiment, learn, and contribute in meaningful ways. It’s a time when open-source work feels more collaborative, and the AI community becomes more accessible. From interns writing code to researchers refining models, everyone shares a common goal: building tools that matter. The rhythm may slow slightly, but the momentum continues through thoughtful projects and shared curiosity. Instead of big launches or fanfare, summer is marked by steady, human-focused development. It’s not just about technology—it’s about people working together, one contribution at a time.

Advertisement

What does GM’s latest partnership with Nvidia mean for robotics and automation? Discover how Nvidia AI is helping GM push into self-driving cars and smart factories after GTC 2025

Improve automatic speech recognition accuracy by boosting Wav2Vec2 with an n-gram language model using Transformers and pyctcdecode. Learn how shallow fusion enhances transcription quality

Gradio is joining Hugging Face in a move that simplifies machine learning interfaces and model sharing. Discover how this partnership makes AI tools more accessible for developers, educators, and users

How accelerated inference using Optimum and Transformers pipelines can significantly improve model speed and efficiency across AI tasks. Learn how to streamline deployment with real-world gains

Confused about DAO and DTO in Python? Learn how these simple patterns can clean up your code, reduce duplication, and improve long-term maintainability

Looking for the next big thing in Python development? Explore upcoming libraries like PyScript, TensorFlow Quantum, FastAPI 2.0, and more that will redefine how you build and deploy systems in 2025

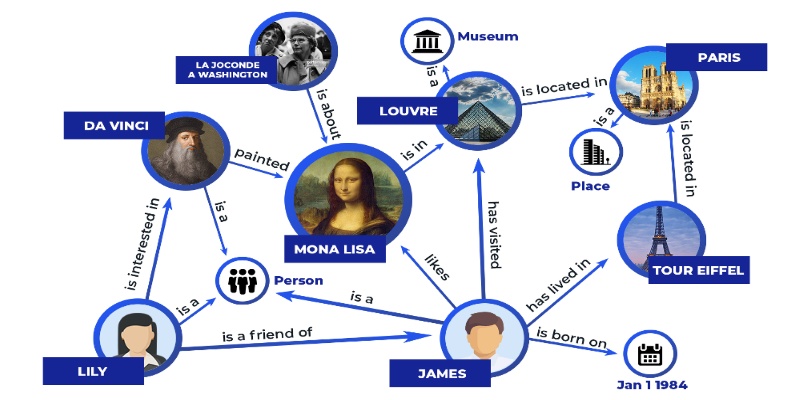

Discover how knowledge graphs work, why companies like Google and Amazon use them, and how they turn raw data into connected, intelligent systems that power search, recommendations, and discovery

Learn how to simplify machine learning integration using Google’s Mediapipe Tasks API. Discover its key features, supported tasks, and step-by-step guidance for building real-time ML applications

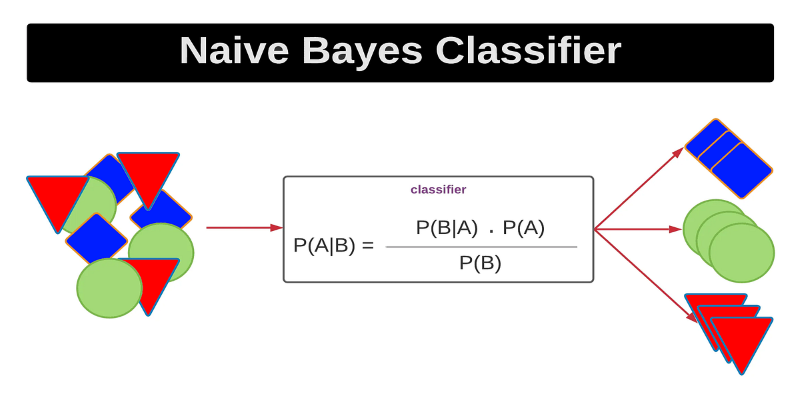

Curious how a simple algorithm can deliver strong ML results with minimal tuning? This beginner’s guide breaks down Naive Bayes—its logic, types, code examples, and where it really shines

Discover how Google BigQuery revolutionizes data analytics with its serverless architecture, fast performance, and versatile features

How Summer at Hugging Face brings new contributors, open-source collaboration, and creative model development to life while energizing the AI community worldwide

Struggling with a small dataset? Learn practical strategies like data augmentation, transfer learning, and model selection to build effective machine learning models even with limited data